The Cyborg’s Prosody, or Speech AI and the Displacement of Feeling

In summer 2021, sound artist, engineer, musician, and educator Johann Diedrick convened a panel at the intersection of racial bias, listening, and AI technology at Pioneerworks in Brooklyn, NY. Diedrick, 2021 Mozilla Creative Media award recipient and creator of such works as Dark Matters, is currently working on identifying the origins of racial bias in voice interface systems. Dark Matters, according to Squeaky Wheel, “exposes the absence of Black speech in the datasets used to train voice interface systems in consumer artificial intelligence products such as Alexa and Siri. Utilizing 3D modeling, sound, and storytelling, the project challenges our communities to grapple with racism and inequity through speech and the spoken word, and how AI systems underserve Black communities.” And now, he’s working with SO! as guest editor for this series (along with ed-in-chief JS!). It kicked off with Amina Abbas-Nazari’s post, helping us to understand how Speech AI systems operate from a very limiting set of assumptions about the human voice. Then, Golden Owens took a deep historical dive into the racialized sound of servitude in America and how this impacts Intelligent Virtual Assistants. Last week, Michelle Pfeifer explored how some nations are attempting to draw sonic borders, despite the fact that voices are not passports. Today, Dorothy R. Santos wraps up the series with a meditation on what we lose due to the intensified surveilling, tracking, and modulation of our voices. [To read the full series, click here] –JS

—

In 2010, science fiction writer Charles Yu wrote a story titled “Standard Loneliness Package,” in which emotions are outsourced to another human being. While Yu’s story is a literal, albeit fictitious, depiction of what emotional labor might entail and the considerations it demands, it was published a year prior to Apple introducing Siri as its official voice assistant for the iPhone. Humans are not meant to be viewed as a type of technology, yet capitalist and neoliberal logics continue to turn to technology as a solution to erase or filter what is least desirable, even if that means the literal modification of voice, accent, and language. What do these actions do to the body at risk of severe fragmentation and compartmentalization?

I weep.

I wail.

I gnash my teeth.

Underneath it all, I am smiling. I am giggling.

I am at a funeral. My client’s heart aches, and inside of it is my heart, not aching, the opposite of aching—doing that, whatever it is.

Charles Yu, “Standard Loneliness Package,” Lightspeed: Science Fiction & Fantasy, November 2010

Yu sets the scene by providing specific examples of feelings of pain and loss that might be handed off to an agent who absorbs the feelings. He shows us, in one way, what a world might look and feel like if we were to go to the extreme of eradicating and offloading our most vulnerable moments to an agent or technician meant to take on this labor. Although written well over a decade ago, its prescient take on the future of feelings wasn’t too far off from where we find ourselves in 2023. How does the voice play into these connections between Yu’s story and what we’re facing in the technological age of voice recognition, speech synthesis, and assistive technologies? How might we re-imagine having the choice to displace our burdens onto another being or entity? Taking a cue from Yu’s story, technologies are being created that pull at the heartstrings of our memories and nostalgia. Yet what happens when we are thrust into a perpetual state of grieving and loss?

Humans are made to forget. Unlike a computer, we are fed information required for our survival. When it comes to language and expression, it is often a stochastic process of figuring out for whom we speak and who is on the receiving end of our communication and speech. Artist and scholar Fabiola Hanna believes polyvocality necessitates an active and engaged listener, which then produces our memories. Machines have become the listeners to our sonic landscapes as well as capturers, surveyors, and documenters of our utterances.

The past few years may have brought remarkable advances in voice tech from companies such as Amazon and Sanas AI, a voice recognition platform that allows a user to apply a vocal filter to any human voice with a discernible accent, transforming the speech into Standard American English. Yet their hopes for accent elimination and voice mimicry foreshadow a future of design without justice and software development sans cultural and societal considerations, something I work through in my artwork in progress, The Cyborg’s Prosody (2022-present).

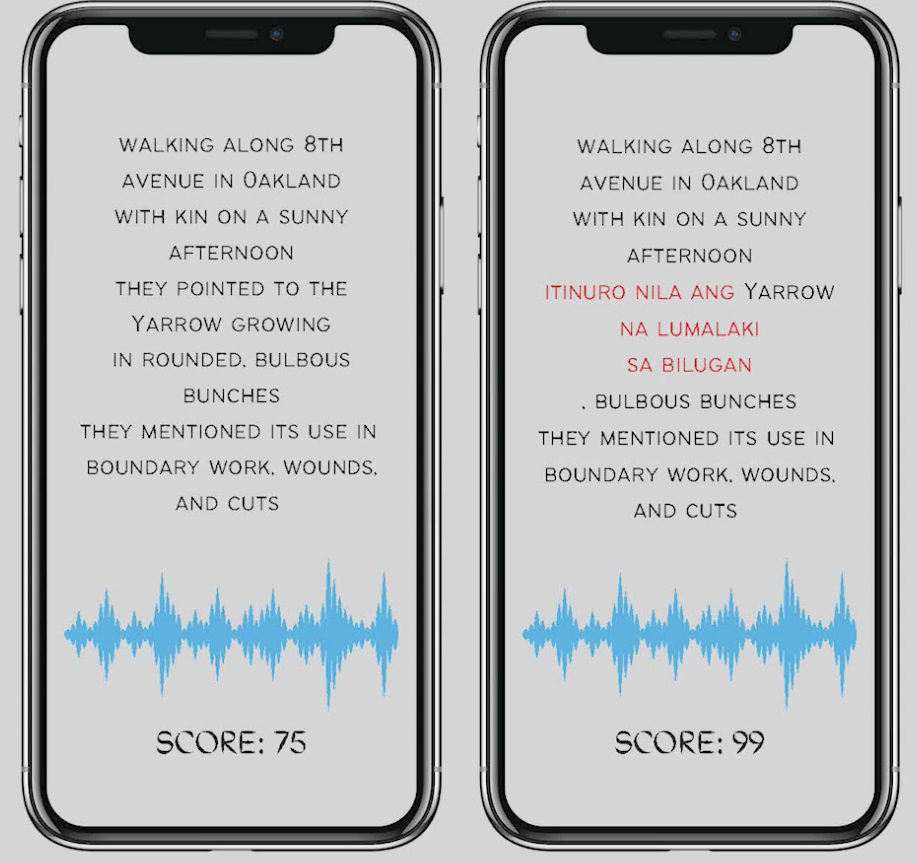

The Cyborg’s Prosody is an interactive web-based artwork (optimized for mobile) that requires participants to read five vignettes that increasingly incorporate Tagalog words and phrases, which the player must repeat. The work serves as a type of parody, an “accent induction school” that provides a decolonial method of exploring how language and accents are learned and preserved. The work is a response to the creation of accent reduction schools and coaches in the Philippines. Originally, the work was meant to be a satire and parody of these types of services, but it shifted into a docu-poetic work about my mother’s immigration story and her experience of learning and becoming fluent in American English.

Even though English is a compulsory language in the Philippines, it is a language learned within the parameters of an educational institution and not common speech outside of schools and businesses. From the call center agents hired at Vox Elite, a BPO company based in the Philippines, to a Filipino immigrant navigating her way through a new environment, the embodiment of language became apparent throughout the stages of research and the creative interventions of the past few years.

In Fall 2022, I gave an artist talk about The Cyborg’s Prosody to a room of predominantly older, white, cisgender male engineers and computer scientists. Apparently, my work caused a stir in one of the conversations among a small group of attendees. A couple of the engineers chose not to address me directly, but I overheard a debate between guests with one of the engineers asking, “What is her project supposed to teach me about prosody? What does mimicking her mom teach me?” He became offended by the prospect of a work that de-centered his language, accent, and what was most familiar to him. The Cyborg’s Prosody is a reversal of what is perceived as a foreign accented voice in the United States into a performance for both the cyborg and the player. I introduce the term western vocal drag to convey the caricature of gender through drag performance, which is apropos and akin to the vocal affect many non-western speakers effectuate in their speech.

The concept of western vocal drag became a way for me to understand and contemplate the ways that language becomes performative through its embodiment. From learning American vernacular to mastering the complex tenses that give meaning to speech acts, there is always a failure or queering of language when a particular affect and accent is emphasized in one’s speech. The delivery of speech acts is contingent upon setting, cultural context, and whether or not there is a type of transaction occurring between the speaker and listener. In terms of enhancement of speech and accent to conform to a dominant language in the workplace and in relation to global linguistic capitalism, scholar Vijay A. Ramjattan states that there is no such thing as accent elimination or even reduction. Rather, an accent is modified. The stakes are high when taking into consideration the marketing and branding of software such as Sanas AI that proposes an erasure of non-dominant foreign accented voices.

The biggest fear related to the use of artificial intelligence within voice recognition and speech technologies is the return to a Standard American English (and accent) preferred by a general public that ceases to address, acknowledge, and care about linguistic diversity and inclusion. The technology itself has been marketed as a way for corporations and the BPO companies they hire to mind the mental health of the call center agents subjected to racism and xenophobia just by the mere sound of their voice and accent. The challenge, moving forward, is reversing the need to serve the western world.

A transorality or vocality presents itself when thinking about scholar April Baker-Bell’s work Black Linguistic Consciousness. When Black youth are taught and required to speak with what is considered Standard American English, this presents a type of disciplining that perpetuates raciolinguistic ideologies of what is acceptable speech. Baker-Bell focuses on an antiracist linguistic pedagogy where Black youth are encouraged to express themselves as a shift towards understanding linguistic bias. Deeply inspired by her scholarship, I started to wonder how to begin framing language learning in terms of a multi-consciousness that includes cultural context and affect as a way to bridge gaps in understanding.

Or, let’s re-think this concept or idea that a bad version of English exists. As Cathy Park Hong brilliantly states, “Bad English is my heritage…To other English is to make audible the imperial power sewn into the language, to slit English open so its dark histories slide out.” It is necessary for us all to reconfigure our perceptions of how we listen and communicate so that we stop perpetuating the search for familiarity and agreement and instead encourage respecting and honoring our differences.

—

Featured Image: Still from artist’s mock-up of The Cyborg’s Prosody (2022-present), copyright Dorothy R. Santos

—

Dorothy R. Santos, Ph.D. (she/they) is a Filipino American storyteller, poet, artist, and scholar whose academic and research interests include feminist media histories, critical medical anthropology, computational media, technology, race, and ethics. She has her Ph.D. in Film and Digital Media with a designated emphasis in Computational Media from the University of California, Santa Cruz and was a Eugene V. Cota-Robles fellow. She received her Master’s degree in Visual and Critical Studies at the California College of the Arts and holds Bachelor’s degrees in Philosophy and Psychology from the University of San Francisco. Her work has been exhibited at Ars Electronica, Rewire Festival, Fort Mason Center for Arts & Culture, Yerba Buena Center for the Arts, and the GLBT Historical Society.

Her writing appears in art21, Art in America, Ars Technica, Hyperallergic, Rhizome, Slate, and Vice Motherboard. Her essay “Materiality to Machines: Manufacturing the Organic and Hypotheses for Future Imaginings,” was published in The Routledge Companion to Biology in Art and Architecture. She is a co-founder of REFRESH, a politically-engaged art and curatorial collective and serves as a member of the Board of Directors for the Processing Foundation. In 2022, she received the Mozilla Creative Media Award for her interactive, docu-poetics work The Cyborg’s Prosody (2022). She serves as an advisory board member for POWRPLNT, slash arts, and House of Alegria.

—

REWIND! . . .If you liked this post, you may also dig:

Your Voice is (Not) Your Passport—Michelle Pfeifer

“Hey Google, Talk Like Issa”: Black Voiced Digital Assistants and the Reshaping of Racial Labor–Golden Owens

Beyond the Every Day: Vocal Potential in AI Mediated Communication –Amina Abbas-Nazari

Voice as Ecology: Voice Donation, Materiality, Identity–Steph Ceraso

The Sound of What Becomes Possible: Language Politics and Jesse Chun’s 술래 SULLAE (2020)—Casey Mecija

Look Who’s Talking, Y’all: Dr. Phil, Vocal Accent and the Politics of Sounding White–Christie Zwahlen

Listening to Modern Family’s Accent–Inés Casillas and Sebastian Ferrada

Your Voice is (Not) Your Passport

In summer 2021, sound artist, engineer, musician, and educator Johann Diedrick convened a panel at the intersection of racial bias, listening, and AI technology at Pioneerworks in Brooklyn, NY. Diedrick, 2021 Mozilla Creative Media award recipient and creator of such works as Dark Matters, is currently working on identifying the origins of racial bias in voice interface systems. Dark Matters, according to Squeaky Wheel, “exposes the absence of Black speech in the datasets used to train voice interface systems in consumer artificial intelligence products such as Alexa and Siri. Utilizing 3D modeling, sound, and storytelling, the project challenges our communities to grapple with racism and inequity through speech and the spoken word, and how AI systems underserve Black communities.” And now, he’s working with SO! as guest editor for this series (along with ed-in-chief JS!). It kicked off with Amina Abbas-Nazari’s post, helping us to understand how Speech AI systems operate from a very limiting set of assumptions about the human voice. Last week, Golden Owens took a deep historical dive into the racialized sound of servitude in America and how this impacts Intelligent Virtual Assistants. Today, Michelle Pfeifer explores how some nations are attempting to draw sonic borders, despite the fact that voices are not passports.–JS

—

In the 1992 Hollywood film Sneakers, depicting a group of hackers led by Robert Redford performing a heist, one of the central security architectures the group needs to get around is a voice verification system. A computer screen asks for verification by voice and Robert Redford uses a “faked” tape recording that says “Hi, my name is Werner Brandes. My voice is my passport. Verify me.” The hack is successful and Redford can pass through the securely locked door to continue the heist. Looking back at the scene today it is a striking early representation of the phenomenon we now call a “deep fake” but also, to get directly at the topic of this post, the utter ubiquity of voice ID for security purposes in this 30-year-old imagined future.

In 2018, The Intercept reported that Amazon filed a patent to analyze and recognize users’ accents to determine their ethnic origin, raising suspicion that this data could be accessed and used by police and immigration enforcement. While Amazon seemed most interested in using voice data for targeting users for discriminatory advertising, the jump to increasing surveillance seemed frighteningly close, especially because people’s affective and emotional states are already being used for the development of voice profiling and voice prints that expand surveillance and discrimination. For example, voice prints of incarcerated people are collected and extracted to build databases of calls that include the voices of people on the other end of the line.

“Collect Calls From Prison” by Flickr User Cobalt123 (CC BY-NC-SA 2.0)

What strikes me most about these vocal identification and recognition technologies is how their appeal seems to lie, for advertisers, surveillers, and policers alike, in the idea that voice is an attractive method to access someone’s identity. Supposedly there are fewer possibilities to evade or obfuscate identification when it is performed via the voice. It “is seen as a solution that makes it nearly impossible for people to hide their feelings or evade their identities.” The voice here works as an identification document, as a passport. While passports can be lost or forged, accent supposedly gives access to the identity of a person that is innate, unchanging, and tied to the body. But passports are not only identification documents. They are also media of mobility, globally unequally distributed, that allow or inhibit movement across borders. States want to know who crosses their borders, who enters and leaves their territory, increasingly so in the name of security.

What, then, when the voice becomes a passport? Voice recognition systems used in asylum administration in the Global North show what is at stake when the voice, and more specifically language and dialect, come to stand in for a person’s official national identity. Several states including Denmark, the Netherlands, the United Kingdom, Switzerland, Sweden, as well as Australia and Canada have been experimenting with establishing the voice, or more precisely language and dialect, to take on the passport’s role of identifying and excluding people.

In the 1990s, not long after the release of Sneakers, these states started to use a crude form of linguistic analysis, later termed Language Analysis for the Determination of Origin (LADO), as part of the administration of claims to asylum. In cases where people could not provide a form of identity documentation or when those documents would be considered fraudulent or inauthentic, caseworkers would look for this national identity in the languages and dialects of people. LADO analyzes acoustic and phonetic features of recorded speech samples in relation to phonetics, morphology, syntax, and lexicon, as well as intonation and pronunciation.

The problems and assumptions of this linguistic analysis are multiple, as linguists have pointed out and critiqued. First, it falsely ties language to territorial and geopolitical boundaries and assumes that language is intimately tied to a place of origin, according to a language ideology that maps linguistic boundaries onto geographical boundaries. Yet nation-state borders on the African continent and in the Middle East were drawn by colonial powers without consideration of linguistic communities. Second, LADO treats language and dialect as static, monoglossic, and a stable index of identity. These assumptions produce the idea of a linguistic passport in which language is supposed to function as a form of official state identification that distributes possibilities and impossibilities of movement and mobility. As a result, the voice becomes a passport and it simultaneously functions as a border, by inscribing language into territoriality. As Lawrence Abu Hamdan has written and shown through his sound art work The Freedom of Speech Itself, LADO functions to control territory, produce national space, and attempts to establish a correlation between voice and citizenship.

I’ll add that the very idea of a passport has a history rooted in forms of colonial governance and population control and the modern nation-state and territorial borders. The body is intimately tied to the history of passports and biometrics. For example, German colonial administrators in South-West Africa, present-day Namibia and a German overseas colony from 1884 to 1919, instituted a pass batch system to control the mobility of Indigenous people, create an exploitable labor force, and institute and reinforce white supremacy and colonial exploitation. Media and Black Studies scholar Simone Browne uses the term “digital epidermalization” to describe how surveillance becomes inscribed and encoded on the skin. Now, it’s coming for the voice too.

In 2016, the German government took LADO a step further and started to use what it calls voice biometric software that supposedly identifies the place of origin of people who are seeking asylum. Someone’s spoken dialect is supposedly recognized and verified on the basis of speech recordings with an average length of 25.7 seconds by software employed by the German Federal Office for Migration and Refugees (abbreviated in German as BAMF). The dialect recognition software now used by German asylum administrators distinguishes between five large Arabic dialect groups: Levantine, Maghreb, Iraqi, Egyptian, and Gulf. Just recently this was expanded with language models for Farsi, Dari, and Pashto. There are plans to expand use of this software to other European countries, evidenced by BAMF traveling to other countries to demonstrate its software.

This “branding” of BAMF’s software stands in stark contradiction to its functionality. The software’s error rate is 20 percent. It is based on a speech sample as short as 26 seconds. People are asked to describe pictures while their speech is recorded. The software then indicates a percentage of probability of the spoken dialect and produces a score sheet that could indicate the following: 74% Egyptian, 13% Levantine, 8% Gulf Arabic, 5% Other. The interpretation of results is left to the caseworkers without clear instructions on how to weigh those percentages against each other. The discretion left to caseworkers makes it more difficult to appeal asylum decisions. According to BAMF, the results are supposed to give indications and clues about someone’s origin and are not a decision-making tool. However, as I have argued elsewhere, algorithmic or so-called “intelligent” bordering practices assume neutrality and objectivity and thereby conceal forms of discrimination embedded in technologies. In the case of dialect recognition, the score sheet’s indicated probabilities produce a seeming objectivity that might sway caseworkers in one direction or another. Moreover, the software encodes distinctions between who is deserving of protection and who is not, a feature of asylum and refugee protection regimes critiqued by many working in the field.

The functionality and operations of the software are also intentionally obscured. Researcher and sound artist Pedro Oliveira addresses the many black-boxed assumptions entering the dialect recognition technology. For instance, in his work Das hätte nicht passieren dürfen he engages with the labor involved in producing sound archives and speech corpora and challenges “the idea that it might be feasible, for the purposes of biometric assessment, to divorce a sound’s materiality from its constitution as a cultural phenomenon.” Oliveira’s work counters the lack of transparency and accountability of the BAMF software. Information about its functionality is scarce. Freedom of information requests and parliamentary inquiries about the technical and algorithmic properties and training data of the software were denied as the information was classified because “the information can be used to prepare conscious acts of deception in the asylum proceeding and misuse language recognition for manipulation,” the German government argued. While it is not necessarily deepfakes like the faked recording of Brandes’s voice used to bypass a security system in Sneakers that the German authorities are worried about, the specter of manipulation of the software looms large.

The software’s poor functionality can have drastic consequences for asylum decisions. In 2018, Vice reported the story of Hajar, whose name was changed to protect his identity. Hajar’s asylum application in Germany was denied on the basis of dialect recognition software that supposedly indicated that he was a Turkish speaker and, thus, could not be from the Autonomous Region Kurdistan as he claimed. Hajar, who speaks the Kurdish dialect Sorani, had been instructed by BAMF to speak into a telephone receiver and describe an image in his first language. The software’s results indicated a 63% probability that Hajar speaks Turkish, and the caseworker concluded that Hajar had lied in his asylum hearings about his origin and his reasons for seeking asylum in Germany. Hajar continued to appeal the asylum decision. The software is not equipped to verify Sorani and should not have been used on Hajar in the first place.

Why the voice? It seems that bureaucrats and caseworkers saw it as a way to identify people with ease and scale language analysis more easily. It is also important to consider the context in which this so-called voice biometry is used. Many people who seek asylum in Germany cannot provide identity documents like passports, birth certificates, or identification cards. This is the case because people cannot take them with them as they flee, they are lost or stolen on people’s journeys, or they are confiscated by traffickers. Many forms of documentation are also not accepted as legitimate by state authorities. Generally, language analysis is used in a hostile political context in which claims to asylum are increasingly treated with suspicion.

The voice as a part of the body was supposed to provide an answer to this administrative problem of states. In response to the long summer of migration in 2015, Germany hired McKinsey to overhaul its administrative processes, save money, accelerate asylum procedures, and make them more “efficient.” In July 2017, the head of the Department for Infrastructure and Information Technology of the German Federal Office for Migration and Refugees hailed the office’s new voice and dialect recognition software as “unrivaled world-wide” in its capacity to determine the region of origin of asylum seekers and to “detect inconsistencies” in narratives about their need for protection. More than identification documents, personal narratives, or other features of the body, the voice, the BAMF expert suggests, is the medium that allows for the indisputable verification of migrants’ claims to asylum, ostensibly pinpointing their place of origin.

Voice and dialect recognition technologies are established by policy makers and security industries as particularly successful tools to produce authentic evidence about the origin of asylum seekers. Asylum seekers have to sound as if they are from a region that warrants their claims to asylum, which requires the translation of voices into geographical locations. As a result, automated dialect recognition becomes more valuable than someone’s testimony. In other words, the voice, abstracted into a percentage, becomes the testimony. Here, the software, similarly to other biometric security systems, is framed as a more objective, neutral, and efficient way of identifying people’s country of origin than human decision-makers. As the German Migration agency argued in 2017: “The IT supported, automated voice biometric analysis provides an independent, objective and large-scale method for the verification of the indicated origin.”

The use of dialect recognition puts forth an understanding of the voice and language that pinpoints someone’s origin to a certain place, without a doubt and without considering someone’s movement or history. In this sense, the software inscribes a vision of a sedentary, ahistorical, static, fixed, and abstracted human into its operations. As a result, geographical borders become reinforced and policed as fixed boundaries of territorial sovereignty. This vision of the voice ignores multiple mobilities and (post)colonial histories and reinscribes the borders of nation-states that reproduce racial violence globally. Dialect recognition reproduces precarity for people seeking asylum. As I have shown elsewhere, in the absence of other forms of identification and the presence of generalized suspicion of asylum claims, accent accumulates value while the content of testimony becomes devalued. Asylum applicants are placed in a double bind, simultaneously being incited to speak during asylum procedures and having their testimony scrutinized and placed under general suspicion.

Similar to conventional passports, the linguistic passport also represents a structurally unequal and discriminatory regime that needs to be abolished. The software was framed as providing a technical solution to a political problem that intensifies the violence of borders. We need to shift to pose other questions as well. What do we want to listen to? How could we listen differently? How could we build a world in which nation-states and passports are abolished and the voice is not a passport but can be appreciated in its multiplicity, heteroglossia, and malleability? How do we want to live together on a planet increasingly becoming uninhabitable?

—

Featured Image: Voice Print Sample–Image from US NIST

—

Michelle Pfeifer is a postdoctoral fellow in Artificial Intelligence, Emerging Technologies, and Social Change at Technische Universität Dresden in the Chair of Digital Cultures and Societal Change. Their research is located at the intersections of (digital) media technology, migration and border studies, and gender and sexuality studies and explores the role of media technology in the production of legal and political knowledge amidst struggles over mobility and movement(s) in postcolonial Europe. Michelle is writing a book titled Data on the Move: Voice, Algorithms, and Asylum in Digital Borderlands that analyses how state classifications of race, origin, and population are reformulated through the digital policing of constant global displacement.

—

REWIND! . . .If you liked this post, you may also dig:

“Hey Google, Talk Like Issa”: Black Voiced Digital Assistants and the Reshaping of Racial Labor–Golden Owens

Beyond the Every Day: Vocal Potential in AI Mediated Communication –Amina Abbas-Nazari

Voice as Ecology: Voice Donation, Materiality, Identity–Steph Ceraso

The Sound of What Becomes Possible: Language Politics and Jesse Chun’s 술래 SULLAE (2020)—Casey Mecija

The Sonic Roots of Surveillance Society: Intimacy, Mobility, and Radio–Kathleen Battles

Acousmatic Surveillance and Big Data–Robin James
