“This AI will heat up any club”: Reggaetón and the Rise of the Cyborg Genre

This series listens to the political, gendered, queer(ed), racial engagements and class entanglements involved in proclaiming out loud: La-TIN-x. ChI-ca-NA. La-TI-ne. ChI-ca-n-@. Xi-can-x. Funded by an Andrew W. Mellon Foundation as part of the Crossing Latinidades Humanities Research Initiative, the Latinx Sound Cultures Studies Working Group critically considers the role of sound and listening in our formation as political subjects. Through both a comparative and cross-regional lens, we invite Latinx Sound Scholars to join us as we dialogue about our place within the larger fields of Chicanx/Latinx Studies and Sound Studies. We are delighted to publish our initial musings with Sounding Out!, a forum that has long prioritized sound from a queered, racial, working-class and “always-from-below” epistemological standpoint. —Ed. Dolores Inés Casillas

—

Busco la colaboración universal donde todos los Benitos puedan llegar a ser Bad Bunny. –FlowGPT, TikTok

In November of 2023, the reggaetón song “DEMO #5: NostalgIA” went viral on various digital platforms, particularly TikTok. The track, posted by user FlowGPT, makes use of artificial intelligence (Inteligencia Artificial) to imitate the voices of Justin Bieber, Bad Bunny, and Daddy Yankee. The song begins with a melody reminiscent of Justin Bieber’s 2015 pop hit “Sorry.” Soon, reggaetón’s characteristic boom-ch-boom-chick drumbeat drops, and the voices of the three artists come together to form a carefully crafted, unprecedented crossover.

Bad Bunny’s catchy verse “sal que te paso a buscar” quickly inundated TikTok feeds as users began to post videos of themselves dancing or lip-syncing to the song. The song was not only very good but it also successfully replicated these artists– their voices, their style, their vibe. Soon, the song exited the bounds of the digital and began to be played in clubs across Latin America, marking a thought-provoking novelty in the usual repertoire of reggaetón hits. In line with the current anxieties around generative AI, the song quickly generated public controversy. Only a few weeks after its release, ‘nostalgIA’ was taken down from most digital platforms.

The mind behind FlowGPT is Chilean producer Maury Senpai, who in a series of TikTok responses explained his mission of creative democratization in a genre that has been historically exclusive of certain creators. In one video, FlowGPT encourages listeners to contemplate the potential of this “algorithm” to allow songs by lesser-known artists and producers to reach the ears of many listeners, by replicating the voices of well-known singers. Maury Senpai’s production process involved lyric writing, extensive study of the singers’ vocals, and the Kits.ai tool.

Therefore, contrary to FlowGPT’s robotic brand, ‘nostalgIA’ was the product of careful collaboration between human and machine– or, what Ross Cole calls “cyborg creativity.” This hybridization enmeshes the artist and the listener, allowing diverse creators their creative desires. Cyborg creativity, of course, is not an inherent result of GenAI’s advent. Instead, I argue that reggaetón has long been embedded in a tradition of musical imitation and a deep reliance on technological tools, which in turn challenges popular concerns about machine-human artistic collaboration.

Many creators worry that GenAI will co-opt a practice that for a long time has been regarded as strictly human. GenAI’s reliance on pre-existing data threatens to hide the labor of artists who contributed to the model’s output. We may also add the inherent biases present in training data. Pasquinelli and Joler propose that the question “Can AI be creative?” be reformulated as “Is machine learning able to create works that are not imitations of the past?” Machine learning models detect patterns and styles in training data and then generate “random improvisation” within this data. Therefore, GenAI tools are not autonomous creative actors but often operate with generous human intervention that trains, monitors, and disseminates the products of these models.

The inability to define GenAI tools as inherently creative on their own does not mean they can’t be valuable for artists seeking to experiment in their work. Hearkening back to Donna Haraway’s concept of the cyborg, Ross Cole argues that

Such [AI] music is in fact a species of hybrid creativity predicated on the enmeshing of people and computers (…) We might, then, begin to see AI not as a threat to subjective expression, but another facet of music’s inherent sociality.

Many authors agree that unoriginal content—works that are essentially reshufflings of existing material—cannot be considered legitimate art. However, an examination of the history of the reggaetón genre invites us to question this idea. In “From Música Negra to Reggaetón Latino,” Wayne Marshall explains how the genre emerged from simultaneous and mutually-reinforcing processes in Panamá, Puerto Rico, and New York, where artists brought together elements of dancehall, reggae, and American hip hop. Towards the turn of the millennium, the genre’s incorporation of diverse musical elements and the availability of digital tools for production favored its commercialization across Latin America and the United States.

The imitation of previous artists has been embedded in the fabric of reggaetón from a very early stage. Some of the earliest examples of reggaetón were in fact Spanish lyrics placed over Jamaican dancehall riddims— instrumental tracks with characteristic melodies. When Spanish-speaking artists began to draw from dancehall, they used these same riddims in their songs, and continue to do so today. A notable example of this pattern is the Bam Bam riddim, which is famously used in the song “Murder She Wrote” by Chaka Demus & Pliers (1992).

This riddim made its way into several reggaetón hits, such as “El Taxi” by Osmani García, Pitbull, and Sensato (2015).

We may also observe reggaetón’s tradition of imitation in frequent references to “old school” artists by the “new school,” through beat sampling, remixes, and features. We see this in Karol G’s recent hit “GATÚBELA,” where she collaborates with Maldy, former member of the iconic Plan B duo.

Reggaetón’s deeply rooted tradition of “tribute-paying” also ties into its differentiation from other genres. As the genre grew in commercial value, perhaps to avoid copyright issues, producers cut down on their direct references to dancehall and instead favored synthesized backings. Marshall quotes DJ El Niño in saying that around the mid-90s, people began to use the term reggaetón to refer to “original beats” that did not solely rely on riddims but also employed synthesizer and sequencer software. In particular, the program Fruity Loops, initially launched in 1997, with “preset” sounds and effects provided producers with a wider set of possibilities for sonic innovation in the genre.

The influence of technology on music does not stop at its production but also seeps into its socialization. Today, listeners increasingly engage with music through AI-generated content. Ironically, following the release of Bad Bunny’s latest album, listeners expressed their discontent through AI-generated memes of his voice. One of the most viral ones consisted of Bad Bunny’s voice singing “en el McDonald’s no venden donas.”

The clip, originally sung by user Don Pollo, was modified using AI to sound like Bad Bunny, and then combined with reggaetón beats and the Bam Bam riddim. Many users referred to this sound as a representation of the light-heartedness they saw lacking in the artist’s new album. While Un Verano Sin Ti (2022) stood out as an upbeat summer album that addressed social issues such as U.S. imperialism and machismo, Nadie Sabe lo que va a Pasar Mañana (2023) consisted mostly of tiraderas or disses against other artists and left some listeners disappointed. In a 2018 post for SO!, Michael S. O’Brien speaks of this sonic meme phenomenon, where a sound and its repetition come to encapsulate collective discontent.

Another notorious case of AI-generated covers targets recent phenomenon Young Miko. As one of the first openly queer artists to break into the urban Latin mainstream, Young Miko filled a long-standing gap in the genre—the need for lyrics sung by a woman to another woman. Her distinctive voice has also been used in viral AI covers of songs such as “La Jeepeta,” and “LALA,” originally sung by male artists. To map Young Miko’s voice over reggaetón songs that advance hypermasculinity– through either a love for Jeeps or not-so-subtle oral sex– represents a creative reclamation of desire where the agent is no longer a man, but a woman. Jay Jolles writes of TikTok’s modifications to music production, namely the prioritization of viral success. The case of AI-generated reggaetón covers demonstrates how catchy reinterpretations of an artist’s work can offer listeners a chance to influence the music they enjoy, allowing them to shape it to their own tastes.

Examining the history of musical imitation and digital innovation in reggaetón expands the bounds of artistry as defined by GenAI theorists. In the conventions of the TikTok platform, listeners have found a way to participate in the artistry of imitation that has long defined the genre. The case of FlowGPT, along with the overwhelmingly positive reception of “nostalgIA,” point towards a future where the boundaries between the listener and the artist are blurred, and where technology and digital spaces are the platforms that allow for an enhanced cyborg creativity to take place.

—

Featured Image: Screenshot from ““en el McDonald’s no venden donas.” Taken by SO!

—

Laurisa Sastoque is a Colombian scholar of digital humanities, history, and storytelling. She works as a Digital Preservation Training Officer at the University of Southampton, where she collaborates with the Digital Humanities Team to promote best practices in digital preservation across Galleries/Gardens, Libraries, Archives, and Museums (GLAM), and other sectors. She completed an MPhil in Digital Humanities from the University of Cambridge as a Gates Cambridge scholar. She holds a B.A. in History, Creative Writing, and Data Science (Minor) from Northwestern University.

—

REWIND!…If you liked this post, you may also dig:

Boom! Boom! Boom!: Banda, Dissident Vibrations, and Sonic Gentrification in Mazatlán—Kristie Valdez-Guillen

Listening to MAGA Politics within US/Mexico’s Lucha Libre –Esther Díaz Martín and Rebeca Rivas

Ronca Realness: Voices that Sound the Sucia Body—Cloe Gentile Reyes

Echoes in Transit: Loudly Waiting at the Paso del Norte Border Region—José Manuel Flores & Dolores Inés Casillas

Experiments in Agent-based Sonic Composition—Andreas Pape

The Cyborg’s Prosody, or Speech AI and the Displacement of Feeling

In summer 2021, sound artist, engineer, musician, and educator Johann Diedrick convened a panel at the intersection of racial bias, listening, and AI technology at Pioneerworks in Brooklyn, NY. Diedrick, 2021 Mozilla Creative Media award recipient and creator of such works as Dark Matters, is currently working on identifying the origins of racial bias in voice interface systems. Dark Matters, according to Squeaky Wheel, “exposes the absence of Black speech in the datasets used to train voice interface systems in consumer artificial intelligence products such as Alexa and Siri. Utilizing 3D modeling, sound, and storytelling, the project challenges our communities to grapple with racism and inequity through speech and the spoken word, and how AI systems underserve Black communities.” And now, he’s working with SO! as guest editor for this series (along with ed-in-chief JS!). It kicked off with Amina Abbas-Nazari’s post, helping us to understand how Speech AI systems operate from a very limiting set of assumptions about the human voice. Then, Golden Owens took a deep historical dive into the racialized sound of servitude in America and how this impacts Intelligent Virtual Assistants. Last week, Michelle Pfeifer explored how some nations are attempting to draw sonic borders, despite the fact that voices are not passports. Today, Dorothy R. Santos wraps up the series with a meditation on what we lose due to the intensified surveilling, tracking, and modulation of our voices. [To read the full series, click here] –JS

—

In 2010, science fiction writer Charles Yu wrote a story titled “Standard Loneliness Package,” where emotions are outsourced to another human being. While Yu’s story is a literal depiction, albeit fictitious, of what might be entailed and the considerations that need to be made of emotional labor, it was published a year prior to Apple introducing Siri as its official voice assistant for the iPhone. Humans are not meant to be viewed as a type of technology, yet capitalist and neoliberal logics continue to turn to technology as a solution to erase or filter what is least desirable even if that means the literal modification of voice, accent, and language. What do these actions do to the body at risk of severe fragmentation and compartmentalization?

I weep.

I wail.

I gnash my teeth.

Underneath it all, I am smiling. I am giggling.

I am at a funeral. My client’s heart aches, and inside of it is my heart, not aching, the opposite of aching—doing that, whatever it is.

Charles Yu, “Standard Loneliness Package,” Lightspeed: Science Fiction & Fantasy, November 2010

Yu sets the scene by providing specific examples of feelings of pain and loss that might be handed off to an agent who absorbs the feelings. He shows us, in one way, what a world might look and feel like if we were to go to the extreme of eradicating and off loading our most vulnerable moments to an agent or technician meant to take on this labor. Although written well over a decade ago, its prescient take on the future of feelings wasn’t too far off from where we find ourselves in 2023. How does the voice play into these connections between Yu’s story and what we’re facing in the technological age of voice recognition, speech synthesis, and assistive technologies? How might we re-imagine having the choice to displace our burdens onto another being or entity? Taking a cue from Yu’s story, technologies are being created that pull at the heartstrings of our memories and nostalgia. Yet what happens when we are thrust into a perpetual state of grieving and loss?

Humans are made to forget. Unlike a computer, we are fed information required for our survival. When it comes to language and expression, it is often a stochastic process of figuring out for whom we speak and who is on the receiving end of our communication and speech. Artist and scholar Fabiola Hanna believes polyvocality necessitates an active and engaged listener, which then produces our memories. Machines have become the listeners to our sonic landscapes as well as capturers, surveyors, and documents of our utterances.

The past few years may have been a remarkable advancement in voice tech with companies such as Amazon and Sanas AI, a voice recognition platform that allows a user to apply a vocal filter onto any human voice, with a discernible accent, that transforms the speech into Standard American English. Yet their hopes for accent elimination and voice mimicry foreshadow a future of design without justice and software development sans cultural and societal considerations, something I work through in my artwork in progress, The Cyborg’s Prosody (2022-present).

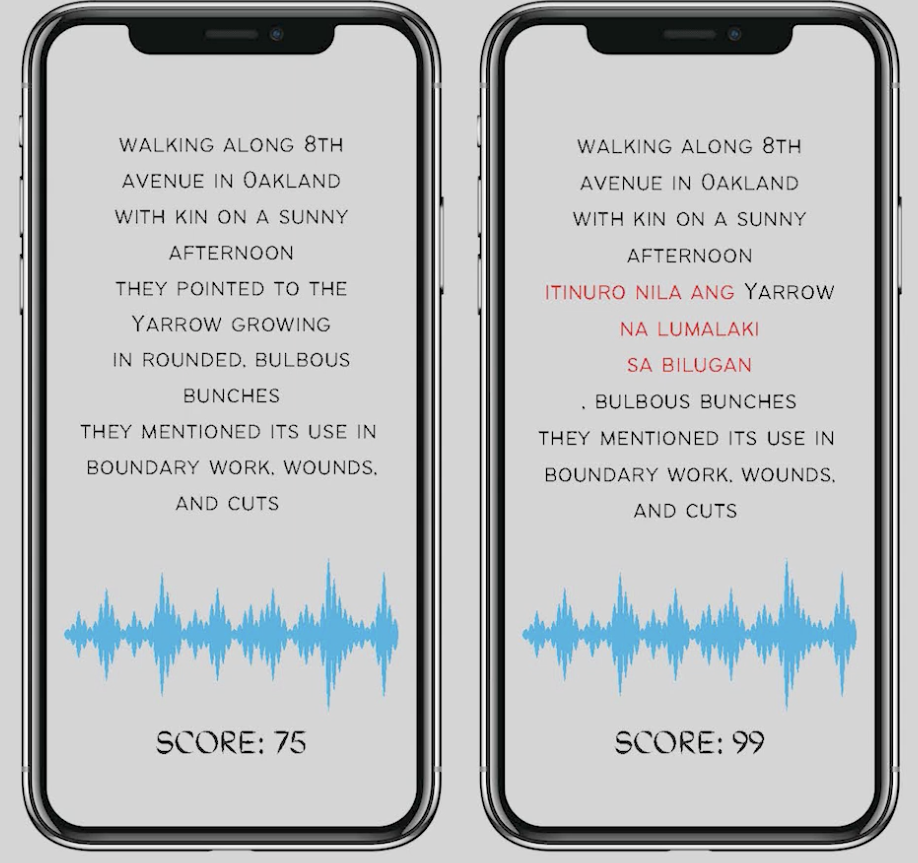

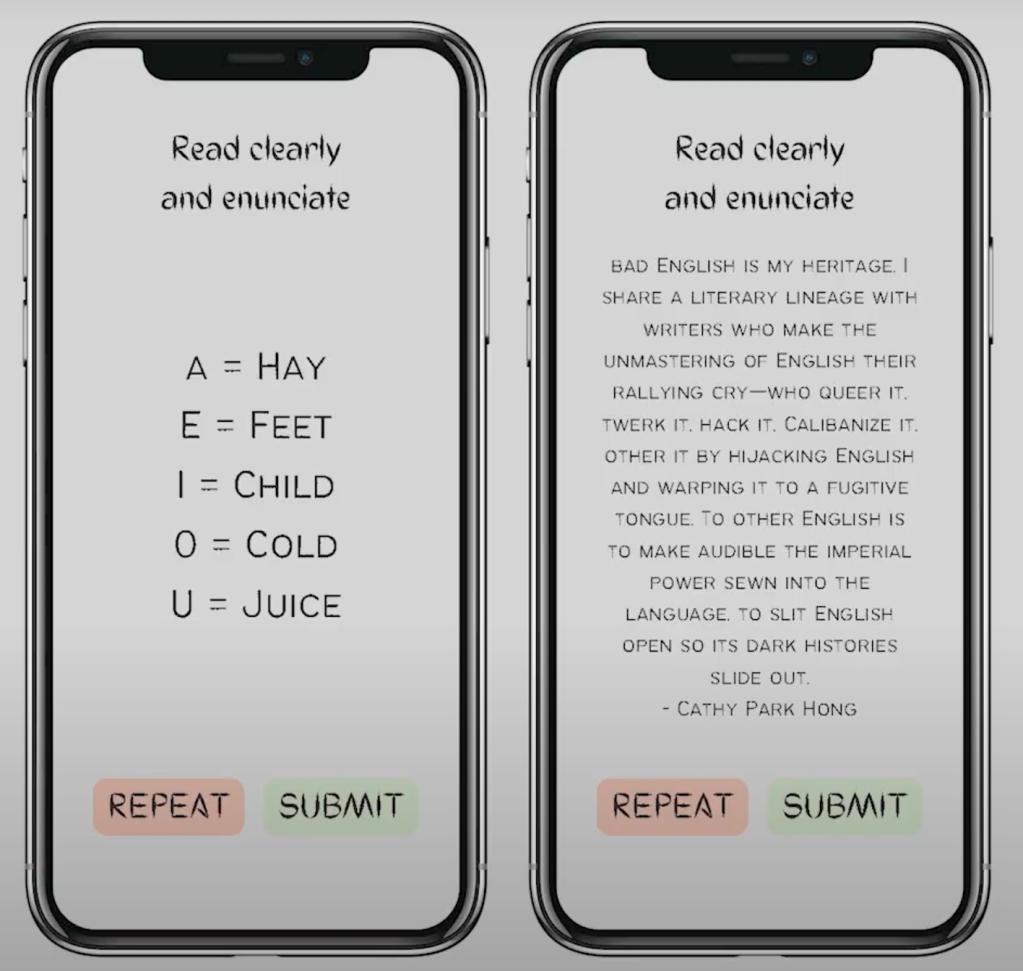

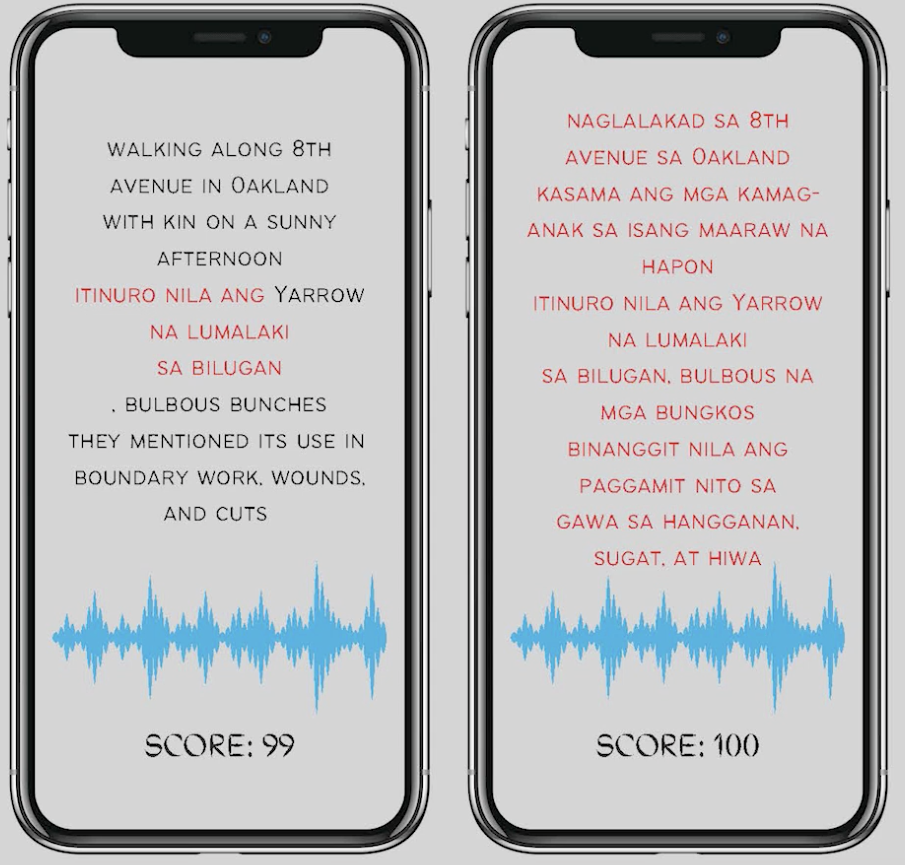

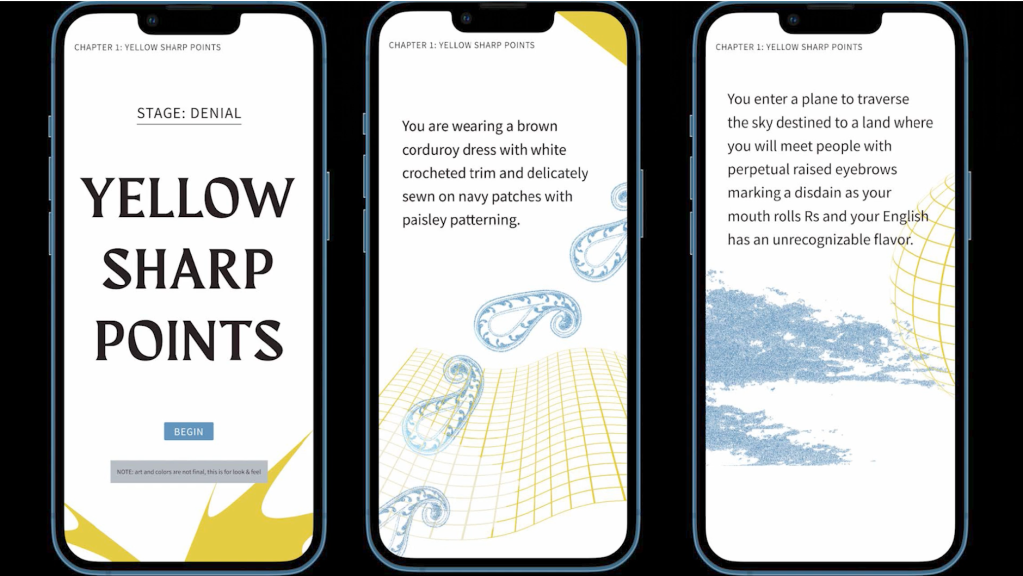

The Cyborg’s Prosody is an interactive web-based artwork (optimized for mobile) that requires participants to read five vignettes that increasingly incorporate Tagalog words and phrases that must be repeated by the player. The work serves as a type of parody, as an “accent induction school” — providing a decolonial method of exploring how language and accents are learned and preserved. The work is a response to the creation of accent reduction schools and coaches in the Philippines. Originally, the work was meant to be a satire and parody of these types of services, but shifted into a docu-poetic work of my mother’s immigration story and learning and becoming fluent in American English.

Even though English is a compulsory language in the Philippines, it is a language learned within the parameters of an educational institution and not common speech outside of schools and businesses. From the call center agents hired at Vox Elite, a BPO company based in the Philippines, to a Filipino immigrant navigating her way through a new environment, the embodiment of language became apparent throughout the stages of research and the creative interventions of the past few years.

In Fall 2022, I gave an artist talk about The Cyborg’s Prosody to a room of predominantly older, white, cisgender male engineers and computer scientists. Apparently, my work caused a stir in one of the conversations between a small group of attendees. A couple of the engineers chose to not address me directly, but I overheard a debate between guests with one of the engineers asking, “What is her project supposed to teach me about prosody? What does mimicking her mom teach me?” He became offended by the prospect of a work that de-centered his language, accent, and what was most familiar to him.The Cyborg’s Prosody is a reversal of what is perceived as a foreign accented voice in the United States into a performance for both the cyborg and the player. I introduce the term western vocal drag to convey the caricature of gender through drag performance, which is apropos and akin to the vocal affect many non-western speakers effectuate in their speech.

The concept of western vocal drag became a way for me to understand and contemplate the ways that language becomes performative through its embodiment. Whether it is learning American vernacular to the complex tenses that give meaning to speech acts, there is always a failure or queering of language when a particular affect and accent is emphasized in one’s speech. The delivery of speech acts is contingent upon setting, cultural context, and whether or not there is a type of transaction occurring between the speaker and listener. In terms of enhancement of speech and accent to conform to a dominant language in the workplace and in relation to global linguistic capitalism, scholar Vijay A. Ramjattan states in that there is no such thing as accent elimination or even reduction. Rather, an accent is modified. The stakes are high when taking into consideration the marketing and branding of software such as Sanas AI that proposes an erasure of non-dominant foreign accented voices.

The biggest fear related to the use of artificial intelligence within voice recognition and speech technologies is the return to a Standard American English (and accent) preferred by a general public that ceases to address, acknowledge, and care about linguistic diversity and inclusion. The technology itself has been marketed as a way for corporations and the BPO companies they hire to mind the mental health of the call center agents subjected to racism and xenophobia just by the mere sound of their voice and accent. The challenge, moving forward, is reversing the need to serve the western world.

A transorality or vocality presents itself when thinking about scholar April Baker-Bell’s work Black Linguistic Consciousness. When Black youth are taught and required to speak with what is considered Standard American English, this presents a type of disciplining that perpetuates raciolinguistic ideologies of what is acceptable speech. Baker-Bell focuses on an antiracist linguistic pedagogy where Black youth are encouraged to express themselves as a shift towards understanding linguistic bias. Deeply inspired by her scholarship, I started to wonder about the process for working on how to begin framing language learning in terms of a multi-consciousness that includes cultural context and affect as a way to bridge gaps in understanding.

Or, let’s re-think this concept or idea that a bad version of English exists. As Cathy Park Hong brilliantly states, “Bad English is my heritage…To other English is to make audible the imperial power sewn into the language, to slit English open so its dark histories slide out.” It is necessary for us all to reconfigure our perceptions of how we listen and communicate that perpetuates seeking familiarity and agreement, but encourages respecting and honoring our differences.

—

Featured Image: Still from artist’s mock-up of The Cyborg’s Prosody(2022-present), copyright Dorothy R. Santos

—

Dorothy R. Santos, Ph.D. (she/they) is a Filipino American storyteller, poet, artist, and scholar whose academic and research interests include feminist media histories, critical medical anthropology, computational media, technology, race, and ethics. She has her Ph.D. in Film and Digital Media with a designated emphasis in Computational Media from the University of California, Santa Cruz and was a Eugene V. Cota-Robles fellow. She received her Master’s degree in Visual and Critical Studies at the California College of the Arts and holds Bachelor’s degrees in Philosophy and Psychology from the University of San Francisco. Her work has been exhibited at Ars Electronica, Rewire Festival, Fort Mason Center for Arts & Culture, Yerba Buena Center for the Arts, and the GLBT Historical Society.

Her writing appears in art21, Art in America, Ars Technica, Hyperallergic, Rhizome, Slate, and Vice Motherboard. Her essay “Materiality to Machines: Manufacturing the Organic and Hypotheses for Future Imaginings,” was published in The Routledge Companion to Biology in Art and Architecture. She is a co-founder of REFRESH, a politically-engaged art and curatorial collective and serves as a member of the Board of Directors for the Processing Foundation. In 2022, she received the Mozilla Creative Media Award for her interactive, docu-poetics work The Cyborg’s Prosody (2022). She serves as an advisory board member for POWRPLNT, slash arts, and House of Alegria.

—

REWIND! . . .If you liked this post, you may also dig:

Your Voice is (Not) Your Passport—Michelle Pfeifer

“Hey Google, Talk Like Issa”: Black Voiced Digital Assistants and the Reshaping of Racial Labor–Golden Owens

Beyond the Every Day: Vocal Potential in AI Mediated Communication –Amina Abbas-Nazari

Voice as Ecology: Voice Donation, Materiality, Identity–Steph Ceraso

The Sound of What Becomes Possible: Language Politics and Jesse Chun’s 술래 SULLAE (2020)—Casey Mecija

Look Who’s Talking, Y’all: Dr. Phil, Vocal Accent and the Politics of Sounding White–Christie Zwahlen

Listening to Modern Family’s Accent–Inés Casillas and Sebastian Ferrada

Recent Comments