The Cyborg’s Prosody, or Speech AI and the Displacement of Feeling

In summer 2021, sound artist, engineer, musician, and educator Johann Diedrick convened a panel at the intersection of racial bias, listening, and AI technology at Pioneerworks in Brooklyn, NY. Diedrick, 2021 Mozilla Creative Media award recipient and creator of such works as Dark Matters, is currently working on identifying the origins of racial bias in voice interface systems. Dark Matters, according to Squeaky Wheel, “exposes the absence of Black speech in the datasets used to train voice interface systems in consumer artificial intelligence products such as Alexa and Siri. Utilizing 3D modeling, sound, and storytelling, the project challenges our communities to grapple with racism and inequity through speech and the spoken word, and how AI systems underserve Black communities.” And now, he’s working with SO! as guest editor for this series (along with ed-in-chief JS!). It kicked off with Amina Abbas-Nazari’s post, helping us to understand how Speech AI systems operate from a very limiting set of assumptions about the human voice. Then, Golden Owens took a deep historical dive into the racialized sound of servitude in America and how this impacts Intelligent Virtual Assistants. Last week, Michelle Pfeifer explored how some nations are attempting to draw sonic borders, despite the fact that voices are not passports. Today, Dorothy R. Santos wraps up the series with a meditation on what we lose due to the intensified surveilling, tracking, and modulation of our voices. [To read the full series, click here] –JS

—

In 2010, science fiction writer Charles Yu wrote a story titled “Standard Loneliness Package,” in which emotions are outsourced to another human being. Yu’s story is a literal, albeit fictitious, depiction of what emotional labor might entail and the considerations it demands, and it was published a year before Apple introduced Siri as its official voice assistant for the iPhone. Humans are not meant to be viewed as a type of technology, yet capitalist and neoliberal logics continue to turn to technology as a solution to erase or filter what is least desirable, even if that means the literal modification of voice, accent, and language. What do these actions do to the body at risk of severe fragmentation and compartmentalization?

I weep.

I wail.

I gnash my teeth.

Underneath it all, I am smiling. I am giggling.

I am at a funeral. My client’s heart aches, and inside of it is my heart, not aching, the opposite of aching—doing that, whatever it is.

Charles Yu, “Standard Loneliness Package,” Lightspeed: Science Fiction & Fantasy, November 2010

Yu sets the scene by providing specific examples of feelings of pain and loss that might be handed off to an agent who absorbs the feelings. He shows us, in one way, what a world might look and feel like if we were to go to the extreme of eradicating and offloading our most vulnerable moments to an agent or technician meant to take on this labor. Although the story was written well over a decade ago, its prescient take on the future of feelings wasn’t too far off from where we find ourselves in 2023. How does the voice play into these connections between Yu’s story and what we’re facing in the technological age of voice recognition, speech synthesis, and assistive technologies? How might we re-imagine having the choice to displace our burdens onto another being or entity? Taking a cue from Yu’s story, technologies are being created that pull at the heartstrings of our memories and nostalgia. Yet what happens when we are thrust into a perpetual state of grieving and loss?

Humans are made to forget. Unlike a computer, we are fed information required for our survival. When it comes to language and expression, it is often a stochastic process of figuring out for whom we speak and who is on the receiving end of our communication and speech. Artist and scholar Fabiola Hanna believes polyvocality necessitates an active and engaged listener, which then produces our memories. Machines have become the listeners to our sonic landscapes as well as capturers, surveyors, and documenters of our utterances.

The past few years may have brought remarkable advances in voice tech from companies such as Amazon and Sanas AI, a voice recognition platform that lets a user apply a vocal filter to any human voice with a discernible accent, transforming the speech into Standard American English. Yet their hopes for accent elimination and voice mimicry foreshadow a future of design without justice and software development sans cultural and societal considerations, something I work through in my artwork in progress, The Cyborg’s Prosody (2022-present).

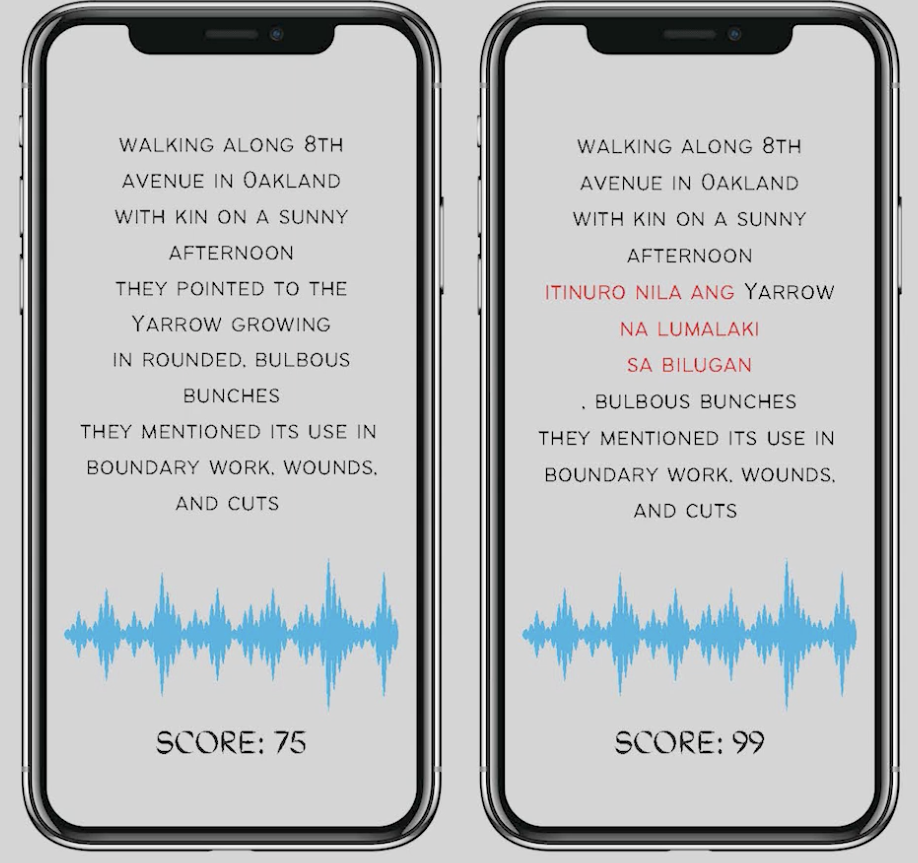

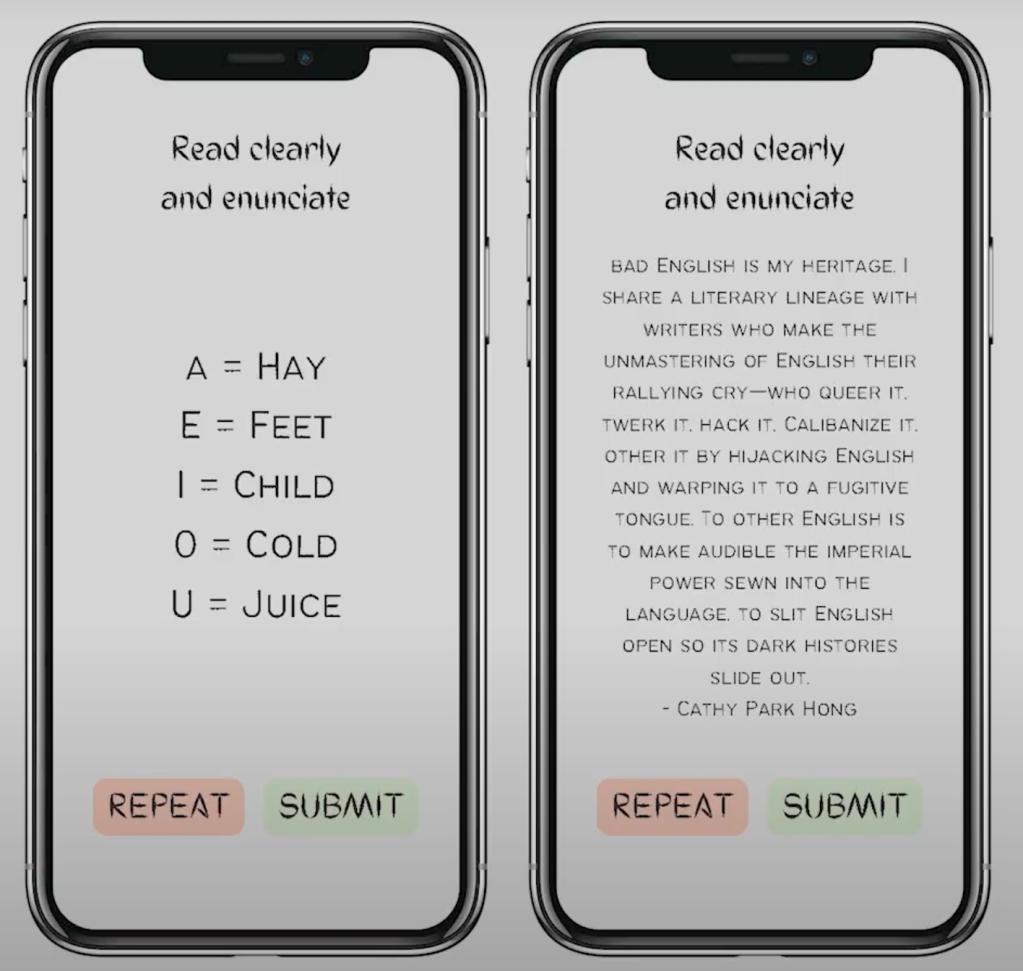

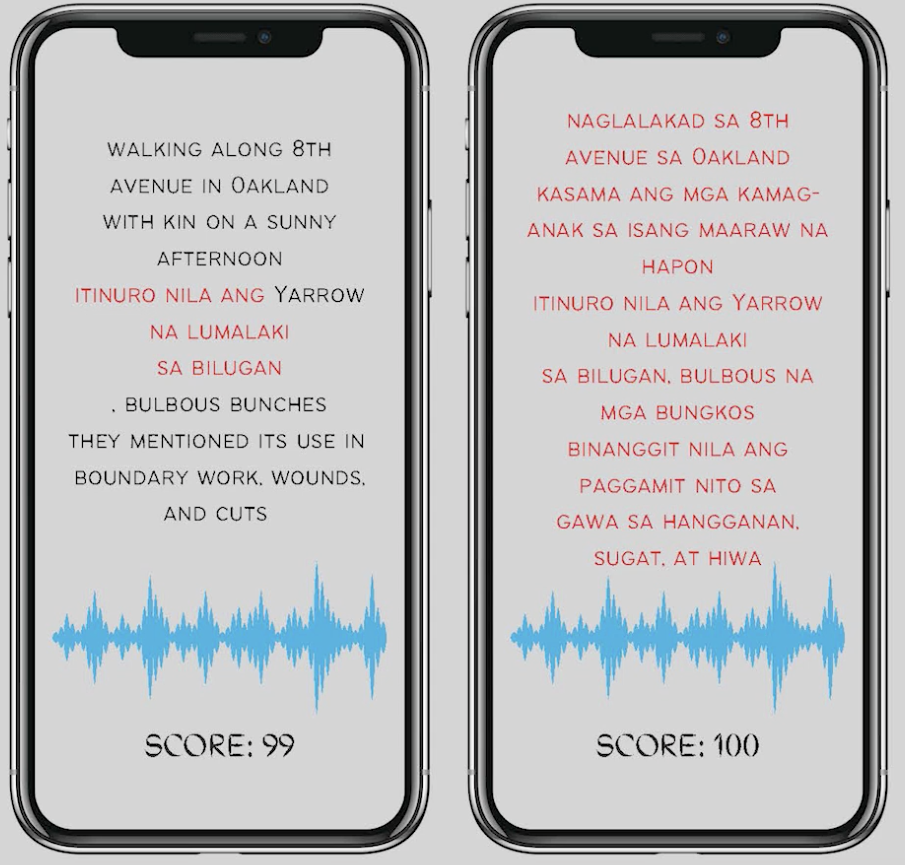

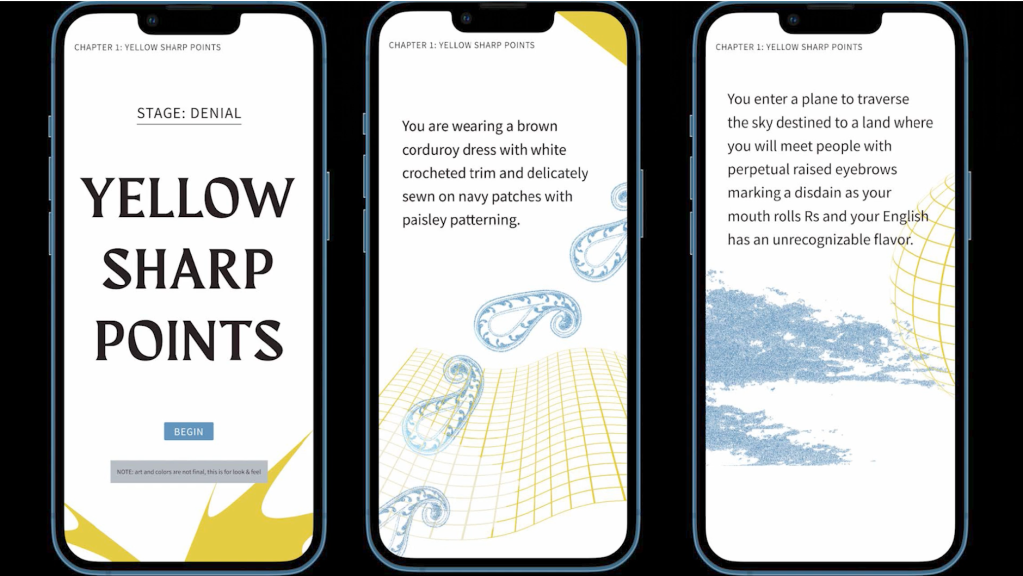

The Cyborg’s Prosody is an interactive web-based artwork (optimized for mobile) that requires participants to read five vignettes that increasingly incorporate Tagalog words and phrases that must be repeated by the player. The work serves as a type of parody, as an “accent induction school,” providing a decolonial method of exploring how language and accents are learned and preserved. The work is a response to the creation of accent reduction schools and coaches in the Philippines. Originally, the work was meant to be a satire and parody of these types of services, but it shifted into a docu-poetic work about my mother’s immigration story and her experience of learning and becoming fluent in American English.

Even though English is a compulsory language in the Philippines, it is a language learned within the parameters of an educational institution and not common speech outside of schools and businesses. From the call center agents hired at Vox Elite, a BPO company based in the Philippines, to a Filipino immigrant navigating her way through a new environment, the embodiment of language became apparent throughout the stages of research and the creative interventions of the past few years.

In Fall 2022, I gave an artist talk about The Cyborg’s Prosody to a room of predominantly older, white, cisgender male engineers and computer scientists. Apparently, my work caused a stir in one of the conversations between a small group of attendees. A couple of the engineers chose not to address me directly, but I overheard a debate between guests with one of the engineers asking, “What is her project supposed to teach me about prosody? What does mimicking her mom teach me?” He became offended by the prospect of a work that de-centered his language, accent, and what was most familiar to him. The Cyborg’s Prosody is a reversal of what is perceived as a foreign accented voice in the United States into a performance for both the cyborg and the player. I introduce the term western vocal drag to convey the caricature of gender through drag performance, which is apropos and akin to the vocal affect many non-western speakers effectuate in their speech.

The concept of western vocal drag became a way for me to understand and contemplate the ways that language becomes performative through its embodiment. From learning American vernacular to mastering the complex tenses that give meaning to speech acts, there is always a failure or queering of language when a particular affect and accent is emphasized in one’s speech. The delivery of speech acts is contingent upon setting, cultural context, and whether or not there is a type of transaction occurring between the speaker and listener. In terms of enhancement of speech and accent to conform to a dominant language in the workplace and in relation to global linguistic capitalism, scholar Vijay A. Ramjattan states that there is no such thing as accent elimination or even reduction. Rather, an accent is modified. The stakes are high when taking into consideration the marketing and branding of software such as Sanas AI that proposes an erasure of non-dominant foreign accented voices.

The biggest fear related to the use of artificial intelligence within voice recognition and speech technologies is the return to a Standard American English (and accent) preferred by a general public that ceases to address, acknowledge, and care about linguistic diversity and inclusion. The technology itself has been marketed as a way for corporations and the BPO companies they hire to mind the mental health of the call center agents subjected to racism and xenophobia just by the mere sound of their voice and accent. The challenge, moving forward, is reversing the need to serve the western world.

A transorality or vocality presents itself when thinking about scholar April Baker-Bell’s work Black Linguistic Consciousness. When Black youth are taught and required to speak with what is considered Standard American English, this presents a type of disciplining that perpetuates raciolinguistic ideologies of what is acceptable speech. Baker-Bell focuses on an antiracist linguistic pedagogy where Black youth are encouraged to express themselves as a shift towards understanding linguistic bias. Deeply inspired by her scholarship, I started to wonder how to begin framing language learning in terms of a multi-consciousness that includes cultural context and affect as a way to bridge gaps in understanding.

Or, let’s re-think this concept or idea that a bad version of English exists. As Cathy Park Hong brilliantly states, “Bad English is my heritage…To other English is to make audible the imperial power sewn into the language, to slit English open so its dark histories slide out.” It is necessary for us all to reconfigure our perceptions of how we listen and communicate so that they no longer perpetuate the seeking of familiarity and agreement, but instead encourage respecting and honoring our differences.

—

Featured Image: Still from artist’s mock-up of The Cyborg’s Prosody (2022-present), copyright Dorothy R. Santos

—

Dorothy R. Santos, Ph.D. (she/they) is a Filipino American storyteller, poet, artist, and scholar whose academic and research interests include feminist media histories, critical medical anthropology, computational media, technology, race, and ethics. She has her Ph.D. in Film and Digital Media with a designated emphasis in Computational Media from the University of California, Santa Cruz and was a Eugene V. Cota-Robles fellow. She received her Master’s degree in Visual and Critical Studies at the California College of the Arts and holds Bachelor’s degrees in Philosophy and Psychology from the University of San Francisco. Her work has been exhibited at Ars Electronica, Rewire Festival, Fort Mason Center for Arts & Culture, Yerba Buena Center for the Arts, and the GLBT Historical Society.

Her writing appears in art21, Art in America, Ars Technica, Hyperallergic, Rhizome, Slate, and Vice Motherboard. Her essay “Materiality to Machines: Manufacturing the Organic and Hypotheses for Future Imaginings,” was published in The Routledge Companion to Biology in Art and Architecture. She is a co-founder of REFRESH, a politically-engaged art and curatorial collective and serves as a member of the Board of Directors for the Processing Foundation. In 2022, she received the Mozilla Creative Media Award for her interactive, docu-poetics work The Cyborg’s Prosody (2022). She serves as an advisory board member for POWRPLNT, slash arts, and House of Alegria.

—

REWIND! . . .If you liked this post, you may also dig:

Your Voice is (Not) Your Passport—Michelle Pfeifer

“Hey Google, Talk Like Issa”: Black Voiced Digital Assistants and the Reshaping of Racial Labor–Golden Owens

Beyond the Every Day: Vocal Potential in AI Mediated Communication –Amina Abbas-Nazari

Voice as Ecology: Voice Donation, Materiality, Identity–Steph Ceraso

The Sound of What Becomes Possible: Language Politics and Jesse Chun’s 술래 SULLAE (2020)—Casey Mecija

Look Who’s Talking, Y’all: Dr. Phil, Vocal Accent and the Politics of Sounding White–Christie Zwahlen

Listening to Modern Family’s Accent–Inés Casillas and Sebastian Ferrada

Beyond the Every Day: Vocal Potential in AI Mediated Communication

In summer 2021, sound artist, engineer, musician, and educator Johann Diedrick convened a panel at the intersection of racial bias, listening, and AI technology at Pioneerworks in Brooklyn, NY. Diedrick, 2021 Mozilla Creative Media award recipient and creator of such works as Dark Matters, is currently working on identifying the origins of racial bias in voice interface systems. Dark Matters, according to Squeaky Wheel, “exposes the absence of Black speech in the datasets used to train voice interface systems in consumer artificial intelligence products such as Alexa and Siri. Utilizing 3D modeling, sound, and storytelling, the project challenges our communities to grapple with racism and inequity through speech and the spoken word, and how AI systems underserve Black communities.” And now, he’s working with SO! as guest editor for this series (along with ed-in-chief JS!). It starts today, with Amina Abbas-Nazari, helping us to understand how Speech AI systems operate from a very limiting set of assumptions about the human voice: are we training it, or is it actually training us?

—

“Hi, good morning. I’m calling in from Bangalore, India.” I’m talking on speakerphone to a man with an obvious Indian accent. He pauses. “Now I have enabled the accent translation,” he says. It’s the same person, but he sounds completely different: loud and slightly nasal, impossible to distinguish from the accents of my friends in Brooklyn.

The AI startup erasing call center worker accents: is it fighting bias – or perpetuating it? (Wilfred Chan, 24 August 2022)

This telephone interaction was recounted in The Guardian’s reporting on a Silicon Valley tech start-up called Sanas. The company provides AI enabled technology for real-time voice modification, making call centre workers’ voices sound more “Western”. The company describes this venture as a solution to improve communication between typically American callers and call centre workers, who might be based in countries such as the Philippines and India. Meanwhile, research has found that major companies’ AI interactive speech systems exhibit considerable racial imbalance when trying to recognise Black voices compared to white speakers. As a result, in the hopes of being better heard and understood, Google smart speaker users with regional or ethnic American accents relay that they find themselves contorting their mouths to imitate Midwestern American accents.

These instances describe racial biases present in voice interactions with AI enabled and mediated communication systems, whereby sounding ‘Western’ entitles one to more efficient communication, better usability, or increased access to services. This is not a problem specific to AI though. Linguistics researcher John Baugh, writing in 2002, describes how linguistic profiling is known to have resulted in housing being denied to people of colour in the US via telephone interactions. Jennifer Stoever’s The Sonic Color Line (2016) presents a cultural and political history of the racialized body and how it both informed and was informed by emergent sound technologies. AI mediated communication repeats and reinforces biases that pre-exist the technology itself, while also helping them become even more widely pervasive.

Mozilla’s commendable Common Voice project aims to ‘teach machines how real people speak’ by building an open source, multi-language dataset of voices to improve usability for non-Western speaking or sounding voices. But singer and musicologist Nina Sun Eidsheim describes how ‘a specific voice’s sonic potentiality [in] its execution can exceed imagination’ (7), and voices as having ‘an infinity of unrealised manifestations’ (8) in The Race of Sound (2019). Eidsheim’s sentiments describe a vocal potential, through musicality, that exists beyond ideas of accents and dialects, and vocal markers of categorised identity. As a practicing vocal performer, I recognise and resonate with Eidsheim’s ideas. I have a particular interest in extended and experimental vocality, especially gained through my time singing with Musarc Choir and working with artist Fani Parali. In these instances, I have experienced the pleasurable challenge of being asked to vocalise the mythical, animal, imagined, alien and otherworldly edges of the sonic sphere, to explore complex relations between bodies, ecologies, space and time, illuminated through vocal expression.

Following from Eidsheim, and through my own vocal practice, I believe AI’s prerequisite of voices as “fixed, extractable, and measurable ‘sound object[s]’ located within the body” is over-simplistic and reductive. Voices, within systems of AI, are made to seem only as computable delineations of person, personality and identity, constrained to standardised stereotypes. By highlighting vocal potential, I offer a unique critique of the way voices are currently comprehended in AI recognition systems. When we appreciate the voice beyond the homogenous, we give it authority and autonomy, ultimately leading to a fuller understanding of the voice and its sounding capabilities.

My current PhD research, Speculative Voicing, applies thinking about the voice from a musical perspective to the sound and sounding of voices in artificially intelligent conversational systems. Hereby the voice becomes an instrument of the body to explore its sonic materiality, vocal potential and extremities of expression, rather than being comprehended in conjunction with vocal markers of identity aligning to categories of race, gender, age, etc. In turn, this opens space for the voice to be understood as a shapeshifting, morphing and malleable entity, with immense sounding potential beyond what might be considered ordinary or everyday speech. Over the long term this provides discussion of how experimenting with vocal potential may illuminate more diverse perspectives about our sense of self and being in relation to vocal sounding.

Vocal and movement artist Elaine Mitchener exhibits the dissolution of the voice as ‘fixed’ perfectly in her performance of Christian Marclay’s No!, which I attended one hot summer’s evening at the London Contemporary Music Festival in 2022. Marclay’s graphic score uses cut outs from comic book strips to direct the performer to vocalise a myriad of ‘No’s’.

Mitchener’s rendering of the piece involved the cooperation and coordination of her entire body, carefully crafting lips, teeth, tongue, muscles and ligaments to construct each iteration of ‘No.’ Each transmutation of Mitchener’s ‘No’s’ came with a distinct meaning, context, and significance, contained within the vocalisation of this one simple syllable. Every utterance explored a new vocal potential, enabled by her body alone. In the context of AI mediated communication, we can see that this way of working with the voice renders the idea of the voice as ‘fixed’ redundant. Mitchener’s vocal potential demonstrates that voices can and do exist beyond AI’s prescribed comprehension of vocal sounding.

In order to further understand how AI transcribes understandings of voice onto notions of identity and vocal potential, I produced the practice project Polyphonic Embodiment(s) as part of my PhD research, in collaboration with Nestor Pestana, with AI development by Sitraka Rakotoniaina. The AI we created for this project is based upon a speech-to-face recognition AI that aims to be able to tell what your face looks like from the sound of your voice. The prospective impact of this AI is deeply unsettling, as its intended applications are wide-ranging, from entertainment to security, and, as previously described, AI recognition systems are inherently biased.

This multi-modal form of comprehending voice is also a hot topic of research being conducted by major research institutions including Oxford University and Massachusetts Institute of Technology. We wanted to explore this AI recognition programme in conjunction with an understanding of vocal potential and the voice as a sonic material shaped by the body. As the project title suggests, the work invites people to consider the multi-dimensional nature of voice and vocal identity from an embodied standpoint. Additionally, it calls for contemplation of the relationships between voice and identity, and individuals having multiple or evolving versions of identity. The collaboration with the custom-made AI software creates a feedback loop to reflect on how people’s vocal sounding is “seen” by AI, to contest the way voices are currently heard, comprehended and utilised by AI, and indeed the AI industry.

The video documentation for this project shows ‘facial’ images produced by the voice-to-face recognition AI, when activated by my voice, modified with simple DIY voice devices. Each new voice variation, created by each device, produces a different outputted face image. Some images perhaps resemble my face (e.g. Device #8), some might be considered more masculine (e.g. Device #10), and some are just disconcerting (e.g. Device #4). The speculative nature of Polyphonic Embodiment(s) is not to suggest that people should modify their voices in interaction with AI communication systems. Rather, the simple devices work with bodily architecture and exaggerate its materiality, considering it as a flexible instrument to explore vocal potential. In turn, this sheds light on the normative assumptions contained within AI’s readings of voice and its relationships to facial image and identity construction.

Through this artistic, practice-led research I hope to evolve and augment discussion around how the sounding of voices is comprehended by different disciplines of research. Taking a standpoint from music and design practice, I believe this can contest ways of working in the realms of AI mediated communication and shape the ways we understand notions of (vocal) identity: as complex, fluid, malleable, and ultimately not reducible to Western logics of sounding.

—

Featured Image: Still image from Polyphonic Embodiment(s), courtesy of author.

—

Amina Abbas-Nazari is a practicing speculative designer, researcher, and vocal performer. Amina has researched the voice in conjunction with emerging technology, through practice, since 2008 and is now completing a PhD in the School of Communication at the Royal College of Art, focusing on the sound and sounding of voices in artificially intelligent conversational systems. She has presented her work at the London Design Festival, Design Museum, Barbican Centre, V&A, Milan Furniture Fair, Venice Architecture Biennial, Critical Media Lab, Switzerland, Litost Gallery, Prague and Harvard University, America. She has performed internationally with choirs and regularly collaborates with artists as an experimental vocalist.

—

REWIND! . . .If you liked this post, you may also dig:

What is a Voice?–Alexis Deighton MacIntyre

Voice as Ecology: Voice Donation, Materiality, Identity–Steph Ceraso

Mr. and Mrs. Talking Machine: The Euphonia, the Phonograph, and the Gendering of Nineteenth Century Mechanical Speech – J. Martin Vest

One Scream is All it Takes: Voice Activated Personal Safety, Audio Surveillance, and Gender Violence—María Edurne Zuazu

Echo and the Chorus of Female Machines—AO Roberts

On Sound and Pleasure: Meditations on the Human Voice–Yvon Bonenfant
